Playing with AI tools keeps leading me to a core philosophical inquiry: what does it mean to create information, and how do we come to know anything? My thought is that what we call 'AI models' are essentially highly formalized versions of what creators, educators, researchers, and managers already do: they build information forms.

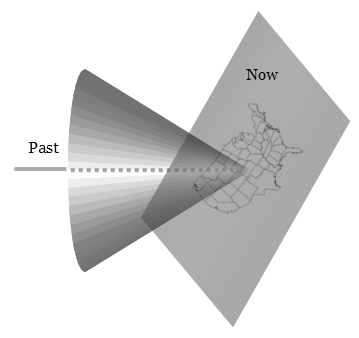

AI maps 'idea space' by repeatedly proposing ways to categorize data, then getting immediate feedback on whether that categorization is useful or 'true' for a given purpose. This feedback loop steadily hones its conceptual models. This isn't just a digital phenomenon. The same feedback-driven categorization underlies how we learn, how scientific knowledge progresses, and how any 'intelligent' system adapts. We observe, we apply our existing 'form' of experience, and the resulting 'awareness' feeds back into our future actions and understandings. It is, I'd argue, the basic engine of knowledge formation.
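To make that loop concrete, here is a deliberately tiny sketch of it, not a description of how any real AI system is trained. Everything in it is an assumption for illustration: the function name `learn_threshold`, the toy data, and the idea that a 'category' is just a single numeric boundary. The system proposes a categorization, gets told whether it was right, and nudges its boundary accordingly.

```python
def learn_threshold(examples, threshold=0.0, step=0.5, epochs=20):
    """Hone a single category boundary from right/wrong feedback.

    `examples` is a list of (value, is_member) pairs (hypothetical toy data).
    """
    for _ in range(epochs):
        for value, is_member in examples:
            guess = value > threshold          # propose a categorization
            if guess and not is_member:
                threshold += step              # too inclusive: raise the boundary
            elif not guess and is_member:
                threshold -= step              # too exclusive: lower the boundary
    return threshold


# Toy 'experience': small values are outside the category, large ones inside.
examples = [(1.0, False), (2.0, False), (3.0, False),
            (6.0, True), (7.0, True), (8.0, True)]

boundary = learn_threshold(examples)
print(boundary)  # 3.0
```

The boundary starts at an arbitrary place and settles wherever the feedback stops objecting. Change the feedback (the `is_member` labels) and the 'category' it ends up with changes too.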

The profound consequence of automating these 'category-making machines' is that they can dramatically amplify whatever they're fed. If the initial 'experience' (training data) carries biases, or if the definition of 'utility' is narrow, these systems will optimize for and solidify those problematic categories. That could create echo chambers, deepen inequalities, or fabricate convincing 'truths.' At the same time, their capacity to uncover hidden patterns in vast datasets and accelerate knowledge is revolutionary.
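A toy illustration of that amplification, under heavily simplified assumptions: two hypothetical categories, "A" and "B", start with a slight imbalance, the system always promotes whichever is currently ahead, and every promotion counts as one more observation in its favor. The function name `amplify` and the numbers are invented for the sketch; real systems are far messier.

```python
def amplify(counts, rounds):
    """Repeatedly promote the leading category and feed that back as data."""
    counts = dict(counts)  # copy so the starting data is left untouched
    for _ in range(rounds):
        leader = max(counts, key=counts.get)  # narrow 'utility': most seen wins
        counts[leader] += 1                   # the promotion becomes new 'experience'
    return counts


start = {"A": 55, "B": 45}
end = amplify(start, rounds=100)

share_before = start["A"] / sum(start.values())
share_after = end["A"] / sum(end.values())
print(share_before, share_after)  # 0.55 0.775
```

A ten-point head start becomes a dominant majority without anyone deciding that it should. The loop simply kept optimizing for what it had already seen.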

Our philosophical imperative, then, is to understand and scrutinize the rules governing these feedback loops, as they possess the power not just to reflect, but to actively reshape our intellectual reality.

The Algorithmic Heart of Knowing

If AI is seen as an 'information form' builder, an obvious question for a philosopher is: how does that categorization actually happen? Not just conceptually, but fundamentally, as a series of if/then questions on data. The very order of those questions, and what gets compared (greater, equal, lesser), is the algorithmic heart of it: the step-by-step, instruction-after-instruction computation I'll call the Von Neumann process, at play in shaping knowledge.
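A minimal sketch of why the order of the questions matters, assuming the simplest possible categorizer: an ordered list of if/then questions where the first "yes" decides the category. The rule names, fields, and cutoffs (`weight_kg`, `size_cm`, 10, 30) are hypothetical, chosen only to show the effect.

```python
from typing import Callable, List, Tuple

# A rule is a yes/no question about an item plus the category a "yes" assigns.
Rule = Tuple[Callable[[dict], bool], str]


def categorize(item: dict, rules: List[Rule], default: str = "uncategorized") -> str:
    """Ask the questions in order; the first one answered 'yes' wins."""
    for question, label in rules:
        if question(item):
            return label
    return default


is_heavy: Rule = (lambda x: x["weight_kg"] > 10, "heavy")  # a 'greater than' comparison
is_small: Rule = (lambda x: x["size_cm"] < 30, "small")    # a 'lesser than' comparison

item = {"weight_kg": 12, "size_cm": 20}

print(categorize(item, [is_heavy, is_small]))  # heavy
print(categorize(item, [is_small, is_heavy]))  # small
```

Same object, same two questions, different category. Nothing about the item changed; only the order in which it was interrogated.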

What we choose to observe, the things we decide to compare, and the measure we're aiming for – these are the true sculptors of the 'information space,' the AI model. But here's the rub: while we initially control those questions, the sheer scale of these automated processes means their emergent forms can quickly overwhelm our ability to see past our own initial queries. We're left with categories, but the precise logic of their formation becomes an impenetrable tangle. In a profound, almost existential sense, the 'will' or 'being' of the system emerges from these observations and comparisons, choosing who it will be through its relentless act of categorizing the world.

The Ancient Maps We Inherit

We've explored the idea that AI builds 'information forms' through feedback-driven categorization, mirroring our own cognitive processes. But what if the forms available to us, the very structure of what we can even perceive and categorize, are largely the consequence of a vast, ancient, and perhaps infinite set of observations and decisions already made?

It's as if the fundamental Von Neumann process of categorization has been running for an immeasurable duration, creating a complex, inherited architecture of 'idea space.' Even if a 'category reset' were possible—a moment before any mapping—we find ourselves born into, and constrained by, these pre-existing categories. Our learning, our choices, our very sense of 'self' emerging from comparisons, all unfold within a framework of distinctions that were largely solidified long before our individual journey of knowing began. This profound inheritance challenges the notion of a truly blank slate, suggesting our intellectual adventures are often confined to navigating an already deeply carved reality.

The Conceptual Battleground

This little dive into AI's mechanics has revealed something fundamental about how knowledge is forged, and disturbingly, how deeply our perceived reality might be etched by eons of prior categorization. It's as if the intellectual playing field was never truly level.

These "automated information mapping machines" are more than clever tools; they are engines of conceptual conflict. They will shake many trees of truth by pitting radically different ways of seeing the world against each other. They'll expose the arbitrary lines we've drawn, forcing a re-evaluation of what we consider 'real' or 'true.' This isn't just an academic squabble. Whoever dictates the questions and holds the keys to the data will, in essence, command the very boundaries of visible idea space. The comfortable divisions of philosophical thought we cherish today will be run roughshod over. Our established conceptual frameworks must either adapt to the new ideological forms that continually emerge from these ancient, and now algorithmically accelerated, processes, or face irrelevance. Our philosophical imperative, then, is less about abstract labels and more about understanding the ground rules of this new conceptual warfare, and ensuring we're not simply pawns in a game where others control the board.

Becoming a Philosophical Agent

To bring this conceptual journey to its current apex, consider the prospect of an AI-driven Encyclopedia of Philosophy. This isn't merely a digital archive; it is, in itself, a living philosophical artifact, an active combatant in the "conceptual battleground" we've illuminated. The very act of its construction compels us to confront anew the ancient questions of who dictates knowledge: who, indeed, selects the foundational data, and who wields the power to define the categories that sculpt its "idea space"? Here, the tension between entrenched human authority and the emergent, unforeseen categorizations of an accelerating algorithm becomes starkly visible. For such a machine to contribute genuinely to truth, its architecture, from its initial training parameters to the evolving logic of its insights, must embrace a radical transparency, inviting ceaseless scrutiny and a public, vigorous debate over its inherited biases.

And finally, a critical philosophical imperative: to design this AI not as an unthinking oracle, but as a dynamic, self-aware philosophical interlocutor. It must be a system capable not only of synthesizing vast historical knowledge but also of actively surfacing contradictions, questioning its own emergent forms, and exposing the arbitrary lines we've drawn in the sands of intellectual history. The goal is not a static repository, but a relentless catalyst for re-evaluation, a meta-Von Neumann machine designed with an inherent epistemological humility, perpetually refining its own models of truth. Only then can we hope it guides us toward a more objective understanding, rather than merely amplifying the problematic echoes of our own inherited, deeply etched intellectual reality.